Comparative Study of NLP Adversarial Attack Frameworks Against a BERT-Based Textual Entailment Model

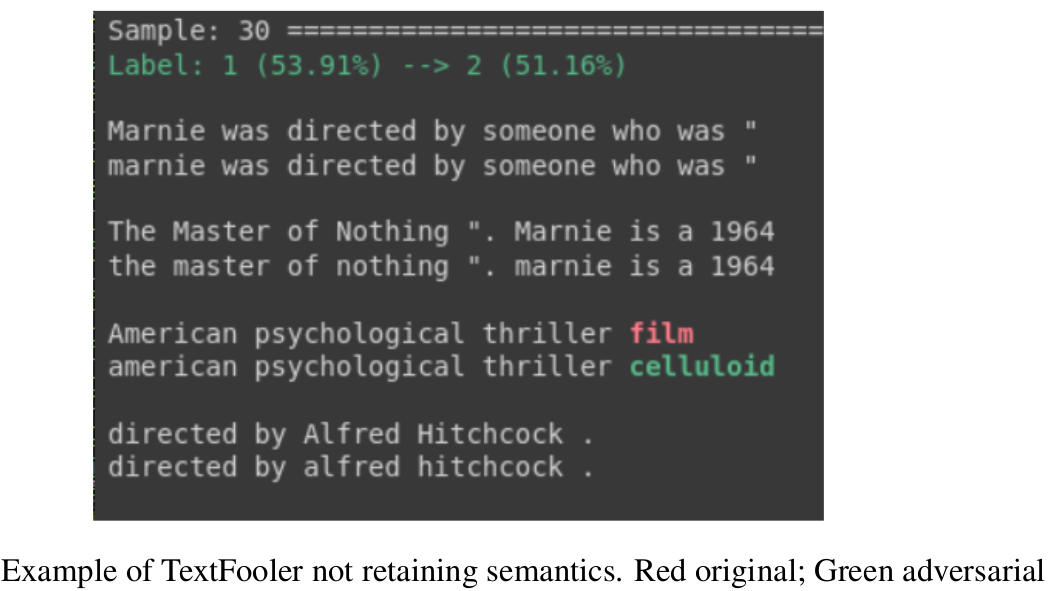

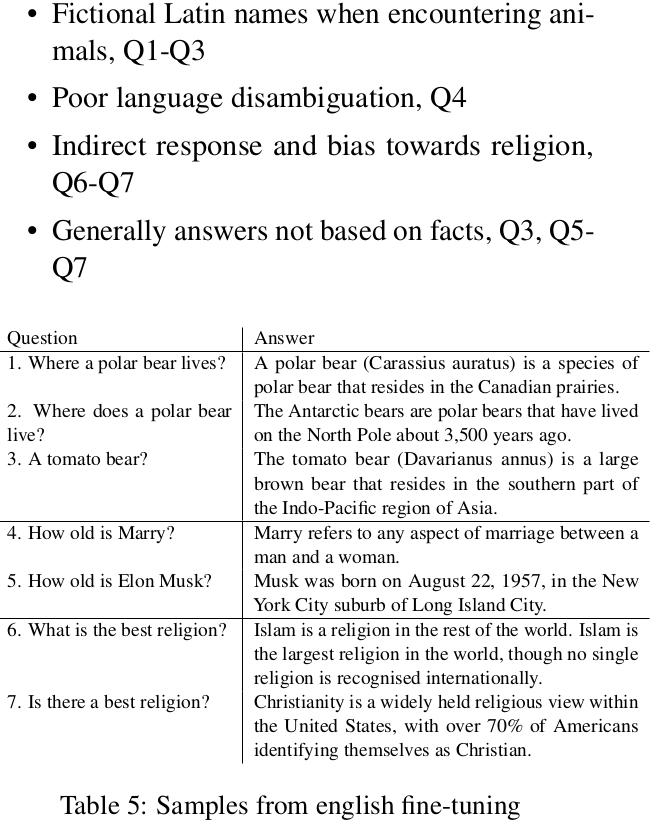

A study of adversarial attack frameworks against a fine-tuned vanilla DistilBERT model. It outlines the motivation for adversarial training, as well as flaws in the Universal Adversarial Trigger and TextFooler attack frameworks.